‘Back to Basics for Startups’ – AI Regulation in the EU

What’s proposed?

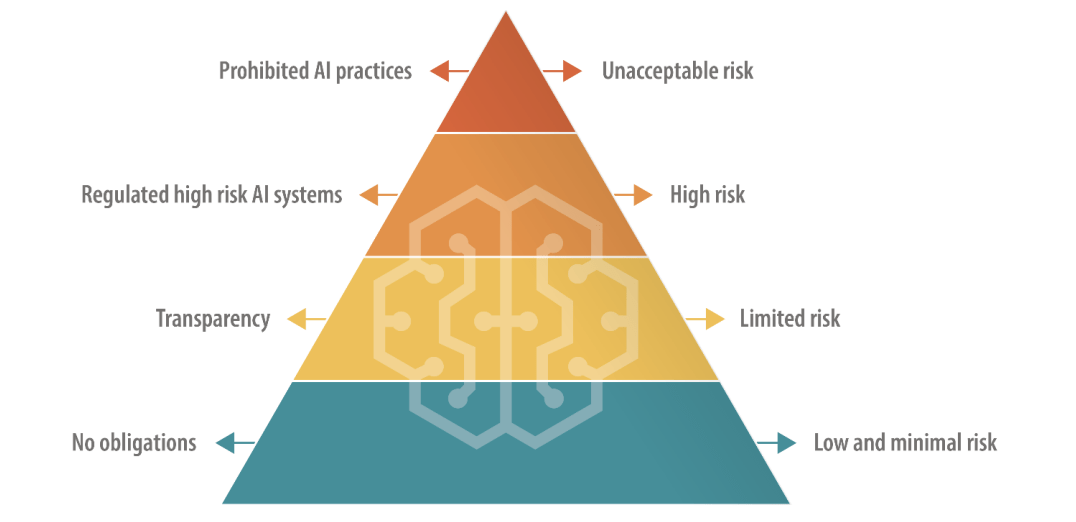

The European Commission has proposed a new Artificial Intelligence Act (AI Act) to regulate the development and use of AI systems in the European Union. The proposed legislation outlines a risk-based approach to AI, with four levels of risk categorisation: unacceptable risk AI, high-risk AI, limited risk AI, and minimal risk AI. If adopted, this would be the first AI law by a major regulator and could set a global blueprint.

Where are we in the legislative process?

The Council of the European Union has already adopted its common position, and the European Parliament is currently discussing the proposal. Once the Parliament has agreed on its own version, the three institutions will negotiate the final text. As things stand, the Act should apply to both public and private actors inside and outside the EU, as long as the AI system is placed on the EU market or affects people in the EU. It can concern both providers (e.g. a developer of a CV-screening tool) and users/deployers (e.g. a bank buying a CV-screening tool) of AI systems.

How will it impact startups?

The proposed AI Act may have a significant impact on startups in the EU, particularly those providing or deploying high-risk AI systems. They will need to comply with the strict requirements outlined in the legislation, including risk assessment, high-quality data sets, and human oversight. However, the AI Act also includes provisions that aim to support innovation, such as AI regulatory sandboxes. Startups in the EU will need to carefully consider the impact of the AI Act on their operations and ensure that they comply with its requirements.

What about General Purpose AI and Generative AI?

Since ChatGPT triggered debates in the EU and the rest of the world, policymakers and industry players have been trying to make sense of what general purpose AI (GPAI), foundation models, and generative AI (GAI) are, and whether the AI Act should impose obligations on those systems. These three types of systems can be explained as follows:

- GPAI systems are AI systems designed to perform generic and widely applicable functions, such as language processing, image and speech recognition, pattern detection, and question answering. GPAI is a broad category covering both foundation models and generative AI systems, which are more specific and targeted.

- Foundation models are pre-trained models that serve as a foundation for more specialised AI systems. Unlike other GPAI systems, foundation models have already been trained on large datasets and can be fine-tuned for specific tasks such as language translation, image recognition, or speech-to-text.

- Generative AI systems use AI algorithms to generate new content, such as text, images, or music. They can produce content that is similar to what a human might create, but they do so autonomously without human input. They are the most specific kind of GPAI systems.

Consequently, not all general purpose AI systems are generative AI systems, since not all GPAI systems generate new content: an AI system that recognises speech patterns and translates them into text is a GPAI system, but is not a generative AI system. By the same logic, not all generative AI systems are general purpose: a generative AI system that produces realistic images of faces is designed to perform only one specific task. ChatGPT, for example, can be described as a GPAI system (performing various tasks with no specific purpose) that is based on a foundation model (trained on vast amounts of data) and is also a generative AI system (generating new content).

Even though the Commission’s original proposal for the AI Act doesn’t mention such AI systems, the text as debated by the relevant Members of the European Parliament explicitly refers to GPAI, foundation models, and generative AI, and calls for specific obligations for each of them. These provisions, if accepted, could have negative consequences for startups in the EU, particularly considering that 45% of startups (according to a survey) consider their AI systems to be GPAI. It is therefore important that GPAI is not overly regulated, as many startups rely on the scale and transfer learning capabilities of GPAI systems to develop and deliver their AI products and services across a range of fields in the AI value chain. Overregulation could create significant entry barriers and limit the supply of GPAI systems available to startups that use them.

What’s next?

The European Parliament’s relevant committees are expected to vote on 11 May, and the plenary vote could take place around mid-June. Once that is done, the Parliament will start negotiating with the Council of the EU and the European Commission (in trilogues) in order for the EU institutions to agree on a final version of the text. We’ll be sure to keep you posted!